Case Study: Skywalker Nexus MaaS Platform – Architecting Governance and Quantitative Control (EVM) for a Core AI Product

This project involved the development and launch of the Minimum Viable Product (MVP) for a next-generation Mobility-as-a-Service (MaaS) application. The primary objective was to disrupt the urban and intercity travel market by leveraging a proprietary Artificial Intelligence (AI/ML) engine that analyzes real-time data from five different transport providers (e.g., train, bus, ride-share).

Juan Manuel

7/2/2025 · 8 min read

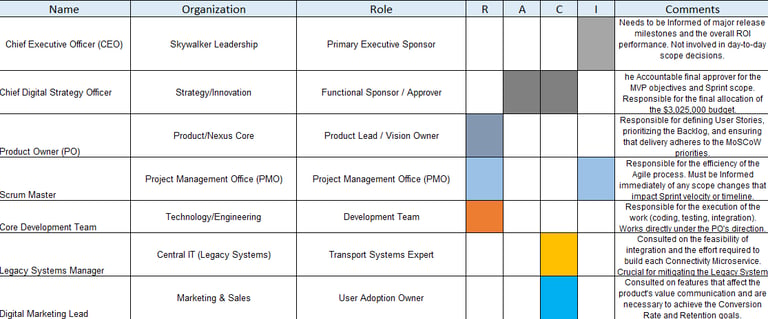

In the Skywalker Nexus MaaS App project, I did not just track tasks; I acted as the Agile Governance Architect—the essential bridge between the technical complexity (AI/APIs) and the financial accountability (EVM). My primary mandate was to ensure the project met its core business objective while diagnosing and correcting critical deviations in efficiency.

Why My Role Was Essential:

Protecting the Investment (The Scope Gatekeeper): The project faced immediate executive pressure for Scope Creep (CR-001). My formal analysis led the Change Control Board to defer the request, directly saving the MVP from a projected 4-week delay and significant cost overrun. My role was to provide the data that enabled management to say "no" to a costly distraction.

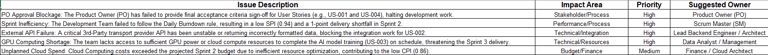

Forcing Accountability (The Data Translator): I moved the conversation from subjective opinions to objective data. By utilizing EVM and establishing a formal Issue Log, I translated technical blockers (like the GPU capacity shortage, IS-004) into clear financial risks, forcing the Executive team to make immediate, critical decisions on budget and resources that ultimately saved the final AI model training timeline.

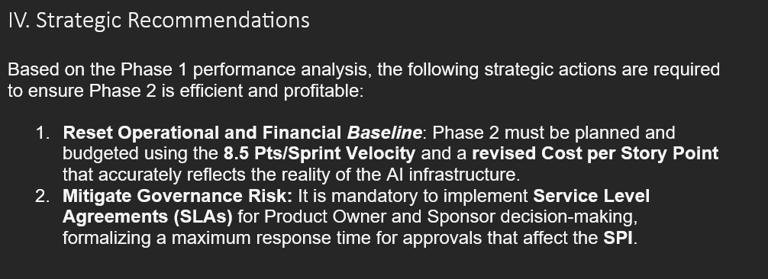

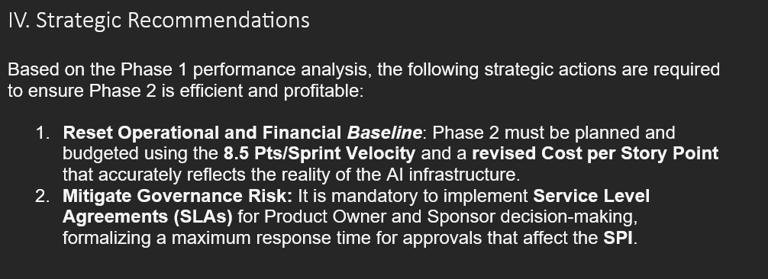

Establishing a New Baseline: My final responsibility was to turn the project’s hard-won lessons into a repeatable process. I delivered not just an app, but a validated operational baseline for the CEO to use in confidently budgeting and scheduling Phase 2.

In essence, I was the process firewall that defended the team’s focus, diagnosed performance flaws using quantitative metrics, and ensured that the initial business promise was delivered, despite internal and external volatility.

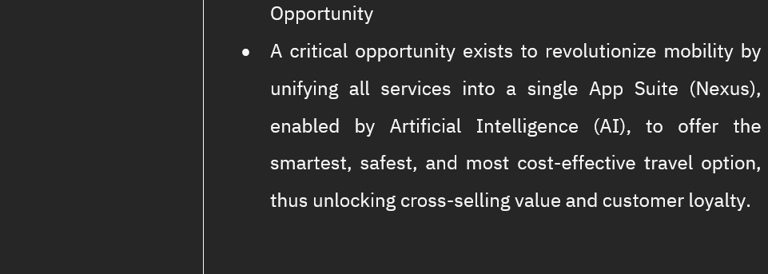

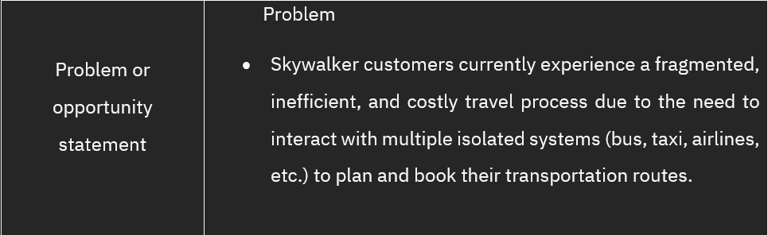

Role

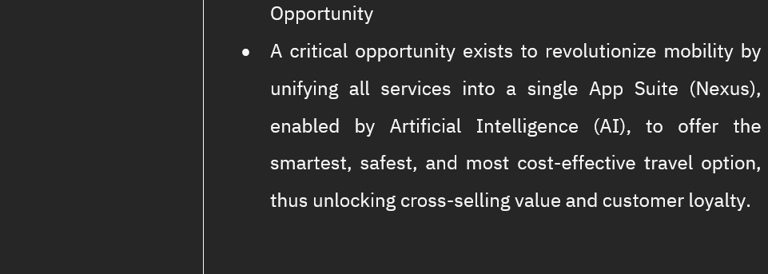

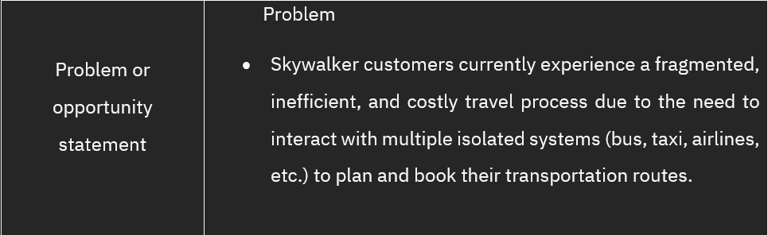

The modern mobility sector is plagued by operational friction. Users are forced to navigate multiple fragmented applications (e.g., separate apps for train, taxi, bus, and air travel) to piece together a single complex journey. This inefficiency translates directly into higher consumer costs and a poor, inconsistent customer experience.

Current solutions lack the dynamic capability to synthesize real-time pricing and schedule data across all major transport modes, resulting in sub-optimal route recommendations and financial loss for the average daily commuter.

The Skywalker Nexus MaaS App was initiated to seize this market gap. The core business hypothesis was that by utilizing a proprietary Machine Learning (ML) engine to consolidate all scheduling, pricing, and booking data onto a single platform, we could create a clear competitive advantage.

Background

Project Goals

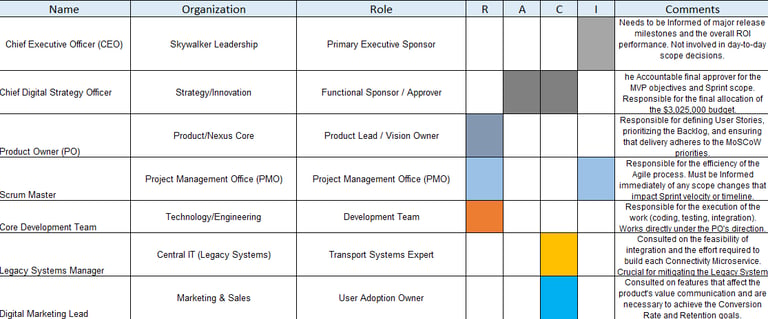

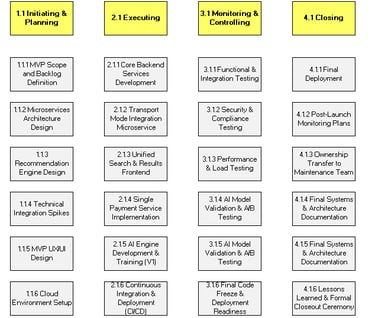

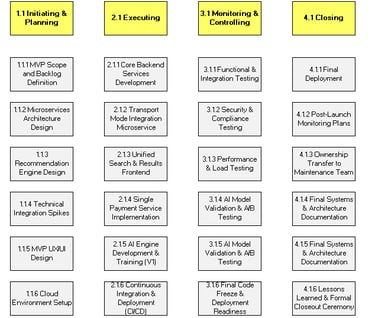

The project utilized a Scrum Agile methodology to maximize flexibility and rapid response to technical unknowns common in AI and API integration projects.

Sprints: Two-week Sprints were implemented to maintain a consistent rhythm and deliver value incrementally.

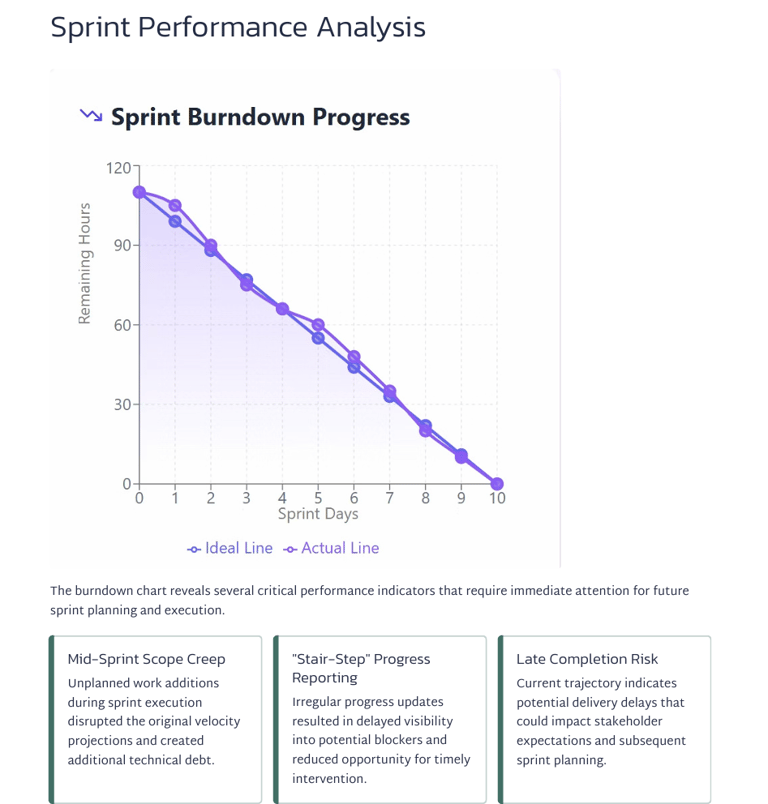

Daily Control: Mandatory daily Burndown tracking, linked to the 8-Hour Micro-Task Rule, was introduced mid-project to address systematic performance lag.

B. Governance Framework: Quantitative Control (EVM)

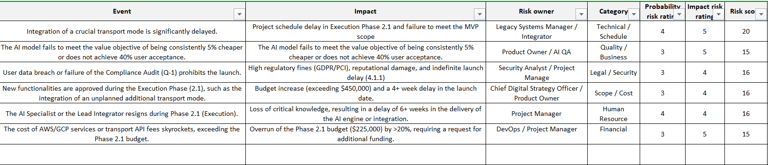

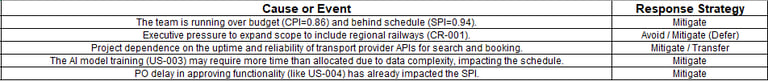

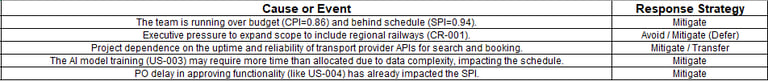

To mitigate the high risk inherent in a critical strategic investment, standard Agile was augmented with formal PMI/EVM Governance focused on data-driven control.

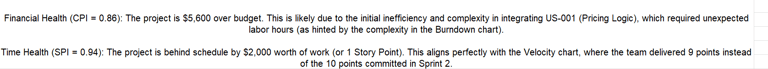

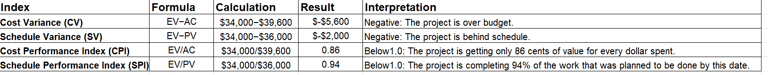

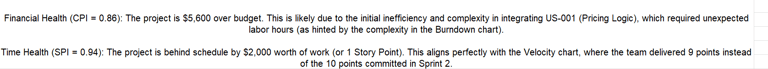

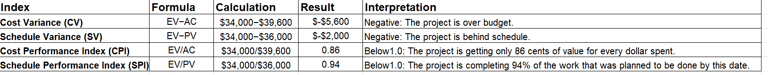

Performance Diagnosis: Weekly tracking of the Cost Performance Index (CPI) and Schedule Performance Index (SPI) provided an objective, up-to-date "Health Check" (Traffic Light Reporting) directly to the Executive Sponsor.

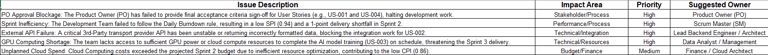

Risk Escalation: All issues and materialized risks were formally funneled through the Issue Log and Risk Register, translating technical problems (e.g., API downtime) into quantifiable financial and schedule impacts for executive decision-making.
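As a rough illustration, the weekly CPI/SPI "Health Check" described above could be sketched in Python as follows. The `WeeklySnapshot` class, the amber/red thresholds, and the sample figures are all assumptions made for this example; they are not the project's actual dashboard values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeeklySnapshot:
    """Cumulative EVM figures for one reporting week (same currency throughout)."""
    planned_value: float  # PV: budgeted cost of the work scheduled to date
    earned_value: float   # EV: budgeted cost of the work actually completed
    actual_cost: float    # AC: money actually spent to date


def cpi(s: WeeklySnapshot) -> float:
    """Cost Performance Index: value earned per unit of money spent."""
    return s.earned_value / s.actual_cost


def spi(s: WeeklySnapshot) -> float:
    """Schedule Performance Index: value earned per unit of value planned."""
    return s.earned_value / s.planned_value


def traffic_light(index: float, amber: float = 0.95, red: float = 0.85) -> str:
    """Map an index to a status colour. The thresholds are assumed, not sourced."""
    if index < red:
        return "RED"
    if index < amber:
        return "AMBER"
    return "GREEN"


# Hypothetical week, chosen only to illustrate the calculation.
week = WeeklySnapshot(planned_value=50_000, earned_value=45_000, actual_cost=54_000)
print(f"CPI={cpi(week):.2f} ({traffic_light(cpi(week))})")
print(f"SPI={spi(week):.2f} ({traffic_light(spi(week))})")
```

An index below 1.0 means the project is earning less value than it is spending (CPI) or than it planned to earn by that date (SPI), which is what the traffic light makes visible at a glance.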

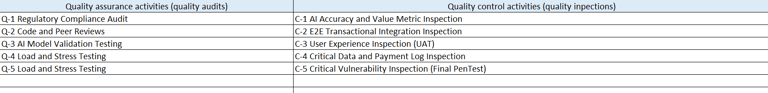

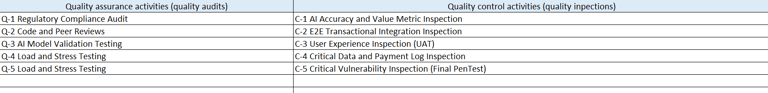

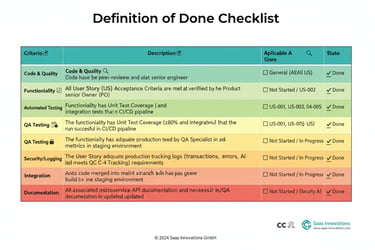

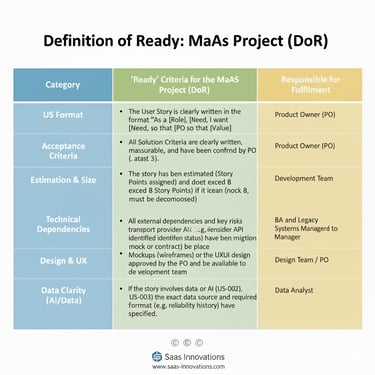

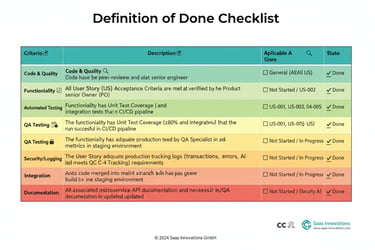

C. Quality and Scope Protection

To ensure the team only worked on stable, high-value tasks, two governance gates were strictly enforced:

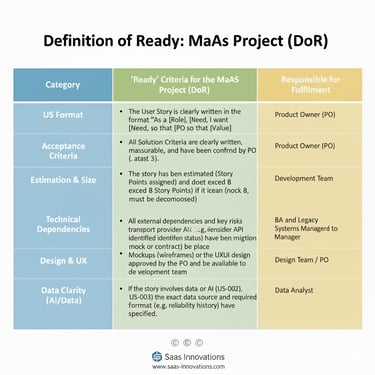

Definition of Ready (DoR): Ensured that no User Story entered a Sprint without final PO approval on Acceptance Criteria (a key intervention to mitigate future approval delays like IS-001).

Definition of Done (DoD): A rigorous set of criteria—including mandatory test coverage and peer code review—was enforced to maintain high quality, successfully eliminating the need for bug-fix Sprints.
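The two gates can be thought of as simple boolean checks on each User Story. The sketch below is a minimal, hypothetical model: the `UserStory` fields are invented for illustration, and it covers only the criteria named in this case study (PO approval of Acceptance Criteria, test coverage above 80%, and peer code review). The full DoD may have included other criteria.

```python
from dataclasses import dataclass


@dataclass
class UserStory:
    """Hypothetical story record holding only the gate-relevant fields."""
    title: str
    po_approved_criteria: bool = False  # DoR: PO signed off the Acceptance Criteria
    test_coverage: float = 0.0          # fraction of code covered by tests (0.0 to 1.0)
    peer_reviewed: bool = False         # DoD: peer code review completed


def is_ready(story: UserStory) -> bool:
    """Definition of Ready gate: may the story enter a Sprint?"""
    return story.po_approved_criteria


def is_done(story: UserStory, min_coverage: float = 0.80) -> bool:
    """Definition of Done gate: coverage strictly above the minimum, plus review."""
    return story.test_coverage > min_coverage and story.peer_reviewed


story = UserStory("Compare train and bus fares", po_approved_criteria=True)
print(is_ready(story))  # True: the PO has approved the Acceptance Criteria
print(is_done(story))   # False: no test coverage or peer review recorded yet
```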

Approach

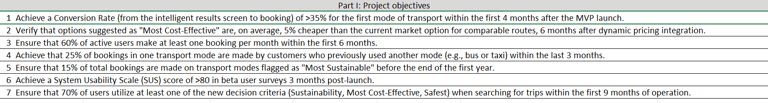

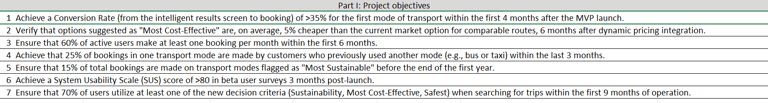

The project's success was measured against a dual set of objectives: Strategic Business Value (validating the core AI function) and User Adoption/Quality (ensuring market fit and usability).

A. Strategic Business Value & AI Performance

These objectives were critical for validating the company’s investment thesis and competitive advantage.

Core Competitive Edge (AI Performance): Verify that options suggested as "Most Cost-Effective" are, on average, 5% cheaper than the current market option for comparable routes, 6 months after dynamic pricing integration.

Sustainability Goal: Ensure that 15% of total bookings are made on transport modes flagged as "Most Sustainable" before the end of the first year.

B. User Adoption & Retention

These metrics confirm that the product is generating utility, encouraging new behaviors, and retaining its user base.

Conversion Rate: Achieve a Conversion Rate (from the intelligent results screen to booking) of >35% for the first mode of transport within the first 4 months after the MVP launch.

Booking Frequency (Retention): Ensure that 60% of active users make at least one booking per month within the first 6 months.

Cross-Mode Adoption: Ensure that 25% of bookings in one transport mode are made by customers who used a different mode (e.g., bus or taxi) within the previous 3 months.

AI Acceptance: Achieve a user acceptance rate for the "Suggested Option (Customer-Tailored)" of >40%, measured 6 months after the feature launch.

C. Usability & Feature Utilization

These objectives confirm the quality of the user experience and the successful utilization of the new smart features.

Usability Score: Achieve a System Usability Scale (SUS) score of >80 in beta user surveys 3 months post-launch.

Feature Utilization: Ensure that 70% of users utilize at least one of the new decision criteria (Sustainability, Most Cost-Effective, Safest) when searching for trips within the first 9 months of operation.

Tools and technology used

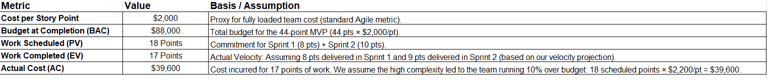

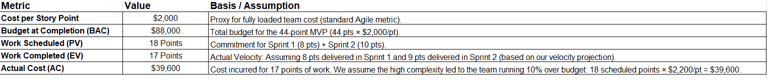

Microsoft Excel / Google Sheets: Earned Value Management (EVM) modeling, weekly SPI/CPI analysis to diagnose the project's financial performance, and dashboards.

Trello: Management of the Product Backlog, Sprints, and workflow control for User Stories.

MS Word: Documentation of Governance and key knowledge for project closure.

Visio/Miro: Creation of the Precedence Diagram Method (PDM) and data flow diagrams for the AI model.

Quantitative Control: Earned Value Management (EVM) Dashboard (Graph of actual project performance, showing CPI/SPI deviation).

Structural Planning: WBS (Work Breakdown Structure) and/or Precedence Diagram (Diagram of the task structure).

Actions/Implementations

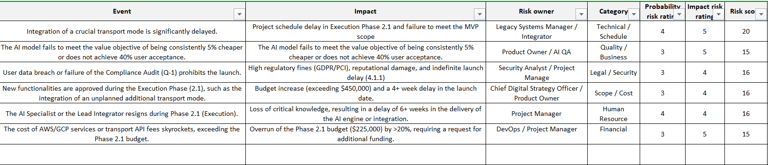

Upon receiving the Scope Creep request (CR-001, Regional Railways expansion), I led the formal Impact Analysis and presented it to management at the Change Control Board (CCB). My analysis demonstrated that immediate acceptance would result in a 4-week delay and a $40,000 cost overrun. Management formally decided to defer the change, protecting the MVP schedule and the scope focus.

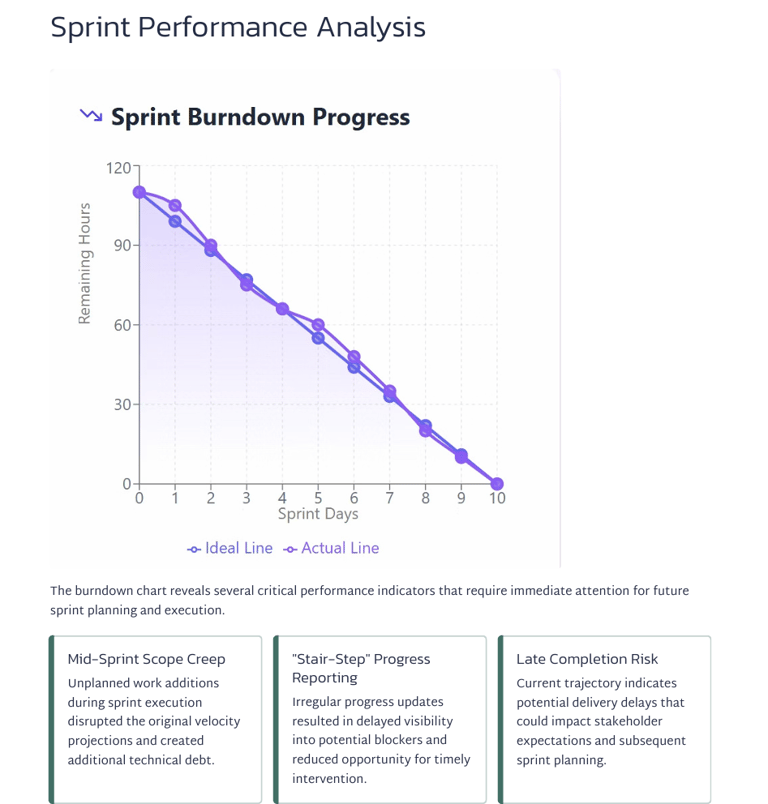

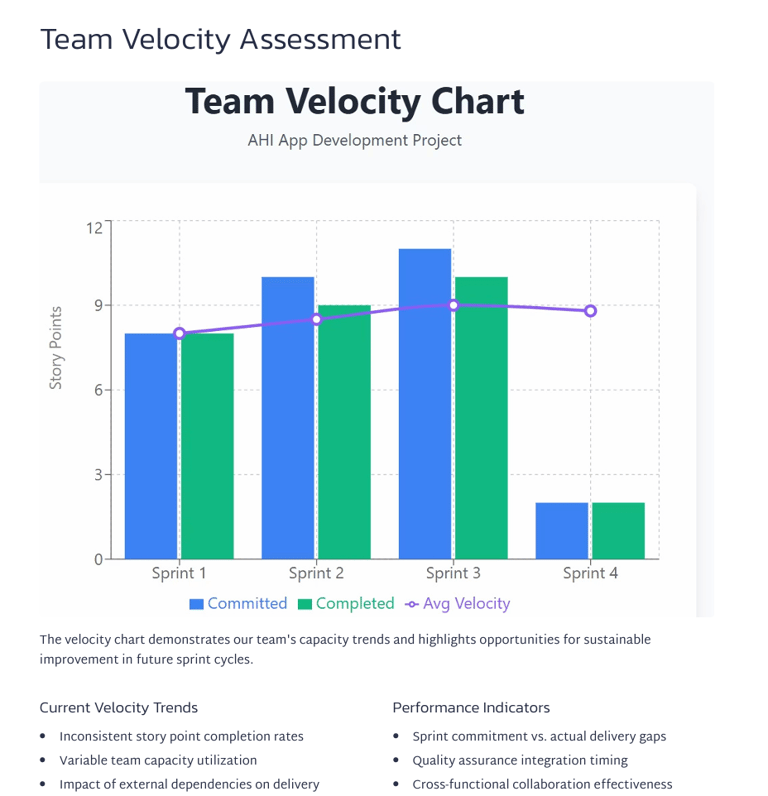

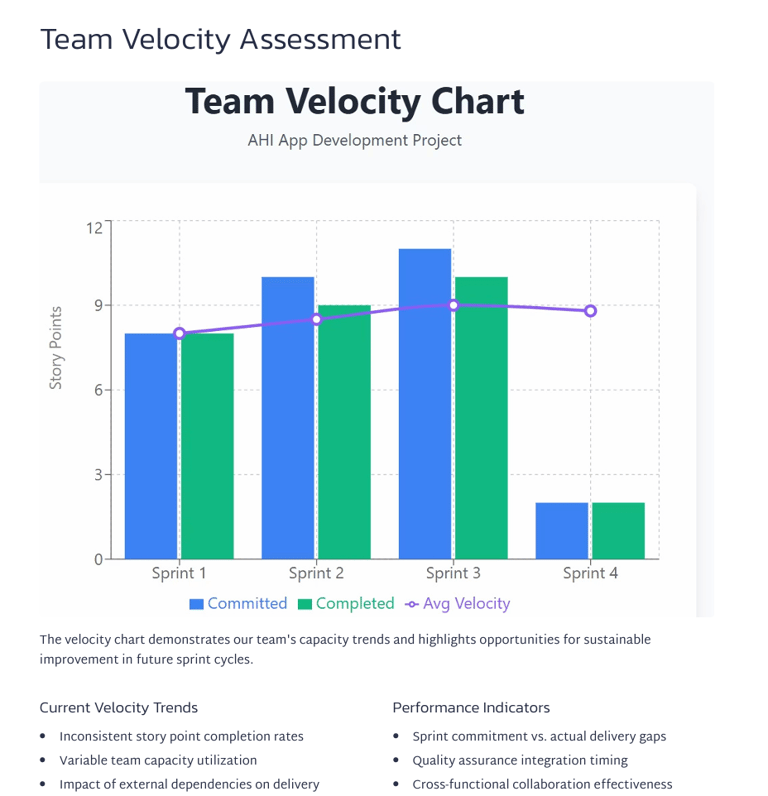

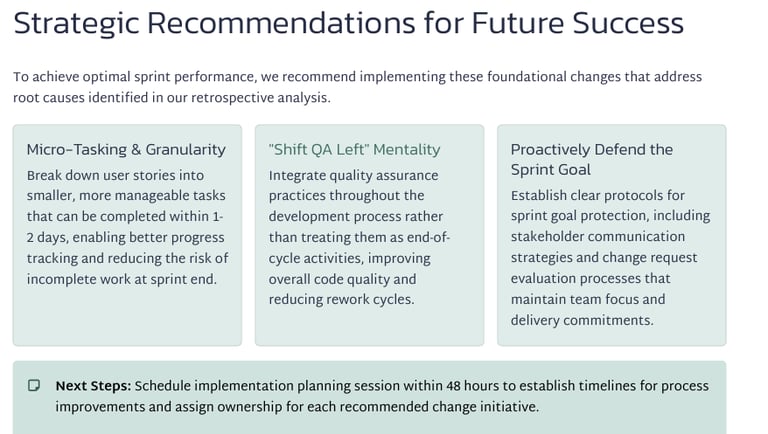

After diagnosing low efficiency (0.83 CPI) and the "stair-step" effect on the Burndown Chart, I implemented a mandatory Retrospective Action Plan: the "Micro-Task Rule (<8 hours)" and the "Day 8 QA Deadline." This discipline enforced more precise estimation and improved effort predictability. The team stabilized its velocity at 8.5 pts/sprint, ensuring the key AI functionality could still be delivered on time.
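The Micro-Task Rule is easy to check mechanically during Sprint Planning. The following is a minimal sketch, assuming task estimates are available as a simple name-to-hours mapping; the task names and hours are hypothetical and only illustrate the check.

```python
MAX_TASK_HOURS = 8  # the "Micro-Task Rule (<8 hours)" from the action plan


def oversized_tasks(estimates: dict[str, float], limit: float = MAX_TASK_HOURS) -> list[str]:
    """Return the tasks whose estimate is not strictly below the limit."""
    return [name for name, hours in estimates.items() if hours >= limit]


# Hypothetical sprint tasks and hour estimates, for illustration only.
sprint_tasks = {
    "Integrate bus provider API": 6.0,
    "Train pricing model": 20.0,
    "Write booking screen tests": 4.5,
}
print(oversized_tasks(sprint_tasks))  # ['Train pricing model']
```

Any task returned by the check would be split before the Sprint starts. Smaller tasks close daily rather than in bursts, which is what smooths the "stair-step" pattern on the Burndown Chart.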

Results/Impact

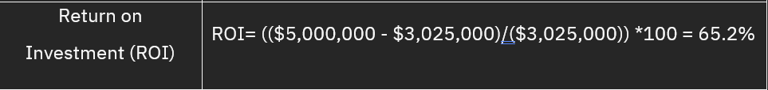

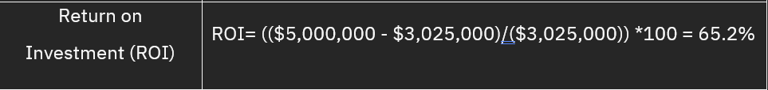

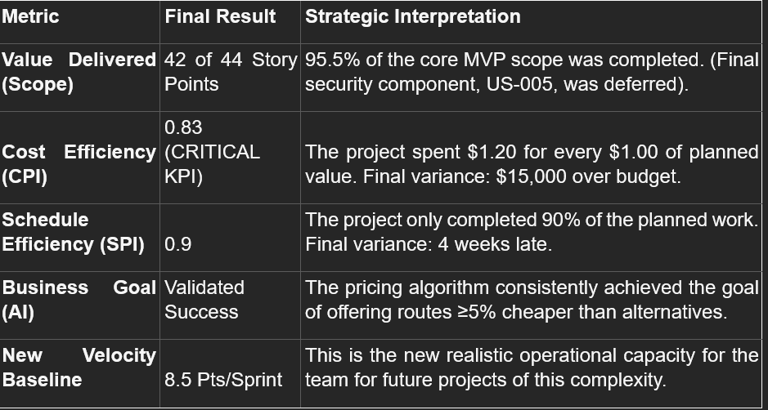

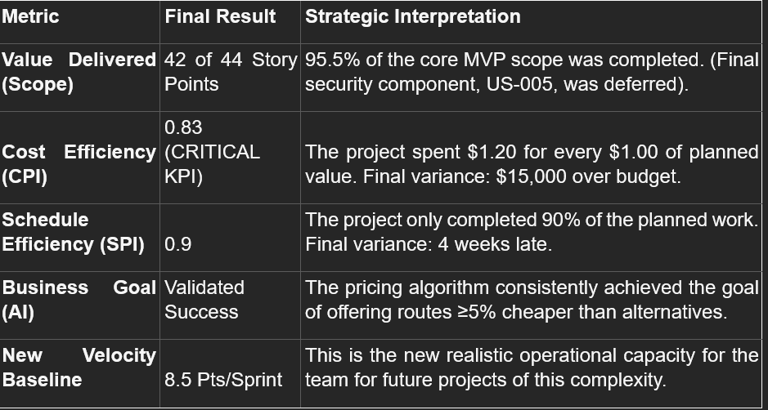

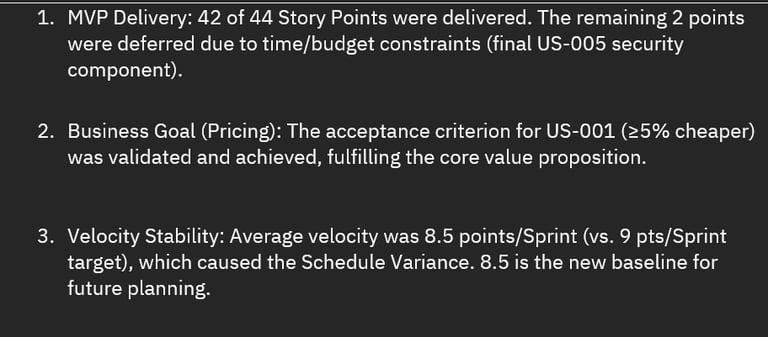

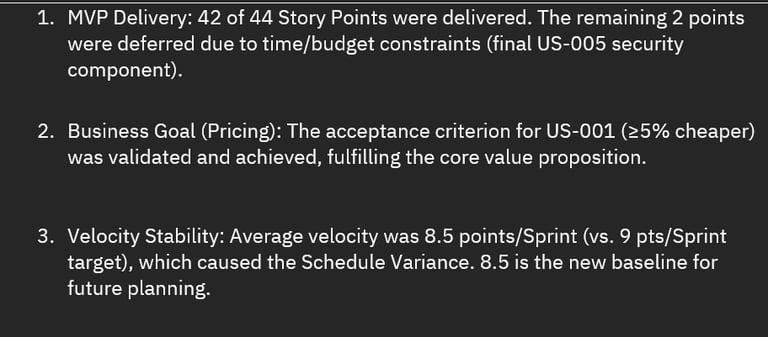

This section validates the business investment and demonstrates that the product's Value Thesis was achieved despite operational inefficiencies. The Earned Value Management (EVM) analysis served as the ultimate diagnostic tool.

Strategic and business impact

Market Thesis Validation (AI): The final product validated its market thesis, consistently achieving the user savings goal of >5% cost reduction. This establishes the business's primary Competitive Advantage for Phase 2.

Launch Quality: The strict enforcement of the DoD resulted in zero bug-fix Sprints. The launch was stable, keeping technical debt low and minimizing post-launch support costs.

Scope and Delivery: The project closed at 95.5% of the planned scope (42 out of 44 Story Points), which was a success considering the Scope Creep risk that was proactively mitigated.

Quantitative performance

Cost Efficiency (CPI): High variance. The project spent $1.20 for every $1.00 of value earned ($15,000 over budget). This was the expected result given the GPU infrastructure underestimation.

Schedule Efficiency (SPI): The project finished with a 4-week delay. The corrective action was to establish the 8.5 pts/sprint velocity as the new realistic performance baseline for the next project.

AI Profitability: Value Thesis Validated.
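To show how the cost figures above relate to each other, here is a short worked calculation. It assumes that "$15,000 over budget" means the cost variance (Actual Cost minus Earned Value) and that the $1.20 ratio is exact. The implied totals it prints are derived under those assumptions and are not stated anywhere in the project records.

```python
def implied_evm_figures(cost_per_dollar_earned: float, overrun: float) -> tuple[float, float, float]:
    """Derive (EV, AC, CPI) from the AC/EV ratio and the overrun (AC - EV)."""
    # AC = ratio * EV and AC - EV = overrun, so EV = overrun / (ratio - 1).
    earned_value = overrun / (cost_per_dollar_earned - 1.0)
    actual_cost = earned_value + overrun
    return earned_value, actual_cost, earned_value / actual_cost


ev, ac, cpi = implied_evm_figures(cost_per_dollar_earned=1.20, overrun=15_000)
print(f"EV=${ev:,.0f}  AC=${ac:,.0f}  CPI={cpi:.2f}")
# EV=$75,000  AC=$90,000  CPI=0.83
```

Under these assumptions the result is consistent with the 0.83 CPI reported elsewhere in this case study, since spending $1.20 per $1.00 earned is the same statement as CPI = 1 / 1.20.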

Lessons learned

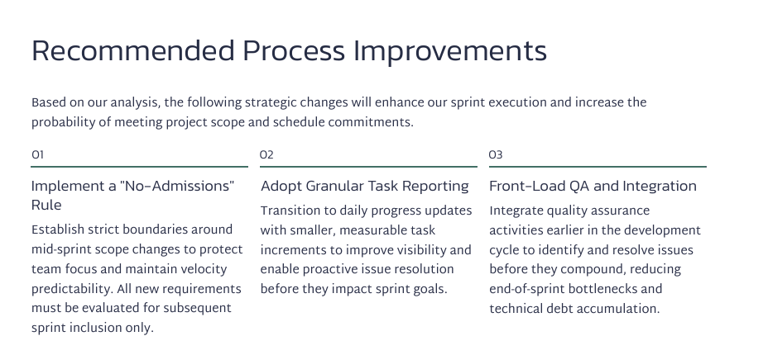

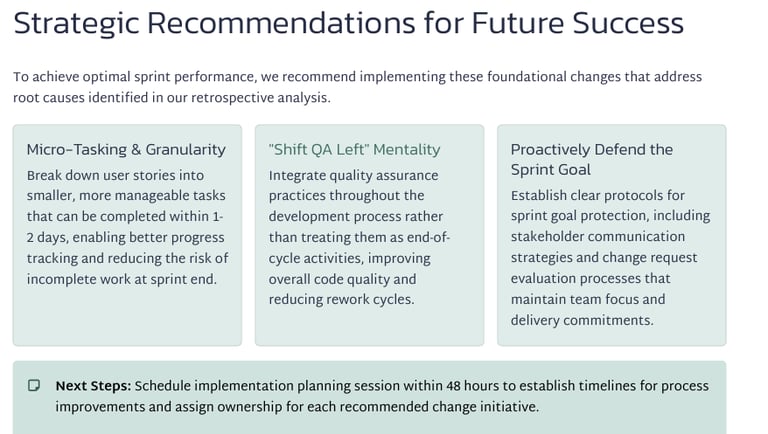

The project's key value lies not just in the product delivered, but in the operational lessons learned under pressure. These insights transform failure points into repeatable processes for future high-risk, high-reward AI projects.

What worked well

Defending Quality via the DoD: The strict enforcement of the Definition of Done (DoD), including >80% test coverage, successfully protected the project from technical debt. This discipline was the primary driver for zero bug-fix Sprints, directly saving post-launch costs.

Data-Driven Issue Escalation: The formal use of the Issue Log and Risk Register allowed for rapid diagnosis and resolution of critical blockers. By linking GPU shortages (IS-004) to the SPI, I ensured executive intervention was timely and effective.

Value focus: The team maintained laser focus on the core business goal (the >5% cost-savings AI), ensuring the MVP was truly viable and competitive.

What needs to be improved

Stakeholder Governance Immaturity: The primary cause of the SPI lag was the delay in PO Approvals (IS-001). This highlights the need to implement formal Service Level Agreements (SLAs) for decision-makers in the Definition of Ready (DoR) to protect the team's capacity.

AI Infrastructure Estimation: The 0.83 CPI indicates a significant underestimation of the time and cost required for the Cloud Compute/GPU setup. Future AI projects must allocate an entire initial Discovery/Spike Sprint dedicated only to infrastructure Proof of Concept (PoC).

Burndown/Velocity Discipline: The team lacked daily discipline, resulting in the "stair-step effect." This will be resolved by institutionalizing the Micro-Task Rule (<8 Hours) in the next project to enforce estimation accuracy and improve management's planning confidence.
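One way the 8.5 pts/sprint baseline could support planning for the next project is a simple velocity-based forecast. This is a sketch, not the planning method actually used: the two-week Sprint length and the velocity come from this case study, while the 60-point backlog is a hypothetical figure for illustration.

```python
import math


def sprints_needed(backlog_points: float, velocity: float = 8.5) -> int:
    """Whole Sprints required to burn down the backlog at a steady velocity."""
    return math.ceil(backlog_points / velocity)


def weeks_needed(backlog_points: float, velocity: float = 8.5, sprint_weeks: int = 2) -> int:
    """Calendar weeks required, assuming back-to-back Sprints of equal length."""
    return sprints_needed(backlog_points, velocity) * sprint_weeks


# Hypothetical Phase 2 backlog of 60 Story Points.
print(sprints_needed(60))  # 8  (60 / 8.5 is about 7.06, rounded up)
print(weeks_needed(60))    # 16
```

Rounding up matters here: a partially filled final Sprint still consumes a full Sprint of calendar time and budget.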


© 2025. All rights reserved.